Scaling Content Moderation with Artificial Intelligence

Don’t miss the DrupalCon Seattle session, “Hot Dog/Not Hot Dog: Artificial Intelligence with Drupal” by Rob Loach, Director of Technology at Kalamuna.

When Success Becomes a Problem

As user-generated content platforms such as forums, classified listings, and recipe sites become increasingly popular, site administrators can quickly become overwhelmed by the volume of content to be moderated. While a lot of automated spam and other inappropriate content can be screened out by CAPTCHA tests, content submitted in violation of site policy must typically be moderated by humans.

Whether user-contributed content is reviewed by a person before publishing or after the fact matters significantly. Allowing users to post directly to a site risks damaging your brand’s reputation if users upload inappropriate content. Without moderation prior to publishing, there is no guarantee that malicious content will be identified before it offends other users.

For the most part, this sort of human moderation of content is feasible when a site is relatively small. Once an online platform finds its audience and grows larger, however, scaling a team of human moderators to manage the user-contributed content becomes increasingly unsustainable.

Fortunately, where these moderation tasks become repetitive and systematic, computers and artificial intelligence may begin to provide solutions to the limits of scaling human beings.

An Intriguing Proposal

When Josh Koenig, Co-Founder & Head of Product at Pantheon, approached Kalamuna CEO Andrew Mallis about integrating Google Cloud Vision with Drupal, we were intrigued. As a team of strategists, designers, and developers working on the web, we are acutely aware of the burdens that come with website management and administration. We constantly seek new ways to simplify and streamline the systems we build for our clients’ editorial staffs, especially when it comes to problems of scale. Artificial intelligence and machine learning offer so much potential in this regard that we were very eager to find a way to harness this technology within Drupal.

Initially, we were interested in exploring machine learning to automatically update content archives on large websites where ALT text was missing. This seemed like an opportunity for AI to perform large-scale, repetitive tasks that would be impractical for a person to perform. Unfortunately, we found the technology was not yet sufficiently reliable for this application.

Enter the hyperlocal news portal Patch.com and its CTO Abe Brewster. They are a longtime Pantheon partner with a clear use-case for image analysis and content moderation. Patch.com allows users to author and post local news stories that may be chosen for syndication and distribution across Patch.com’s network of “patches” (i.e. local sites) across the United States. No strangers to the risks of hosting and publishing user-generated content, Patch.com already had a robust content moderation process in place, but it was mostly manual, and relied on site members, the public, and sometimes lawyers, to flag inappropriate or copyright-protected images.

Once we understood the use case, Kalamuna and Pantheon saw the opportunity to create an AI-assisted content moderation tool for the Patch.com editorial team. By applying Kalamuna’s user-centered design practices, we felt confident we could make the editorial experience a seamless combination of Google Cloud’s AI technology and Drupal’s workflow capabilities.

Integrating Drupal and Google Cloud Vision

We wanted to explore where some of the common integration points could be made between Drupal and Google Cloud Vision, so we started on the creation of the Google Cloud Vision Drupal module. By leveraging Drupal 8’s hook system, we were able to put together a proof-of-concept demonstrating some of the API’s capabilities.

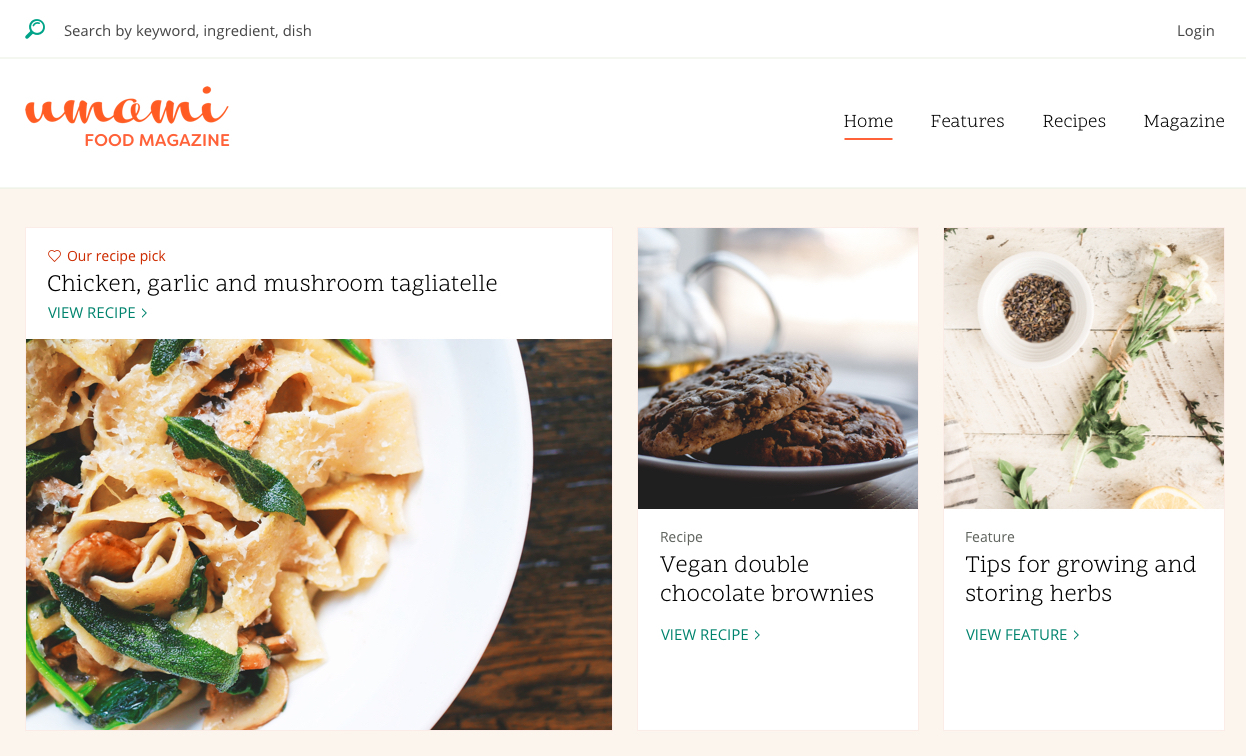

To do that we built a demonstration site using the Umami demo profile. This install profile provides a demo version of a foodie website to demonstrate some of the content workflows available in Drupal.

Umami provides users the ability to upload recipes, which seemed like a great place to ensure images that users upload don’t contain adult content. Since the images are uploaded through Drupal’s image field, we were able to create a hook to pass them through Google Cloud Vision’s SafeSearch algorithm and get a set of results we could use.

When a user uploads an image that is deemed “Racy” or “Adult” by Google Cloud Vision, it is flagged accordingly.

We accomplished the image field verification through hook_field_widget_form_alter():

```php
use Drupal\Core\Form\FormStateInterface;
use Drupal\file\FileInterface;

/**
 * Implements hook_field_widget_form_alter().
 *
 * Adds Google Cloud Vision checks on the file uploads.
 *
 * @see https://api.drupal.org/api/drupal/core%21modules%21field%21field.api.php/function/hook_field_widget_form_alter/8.6.x
 */
function google_cloud_vision_field_widget_form_alter(&$element, FormStateInterface $form_state, $context) {
  // Only act on image fields.
  $field_definition = $context['items']->getFieldDefinition();
  if (!in_array($field_definition->getType(), ['image'])) {
    return;
  }
  // Register the upload validator; it takes no extra arguments.
  $element['#upload_validators']['google_cloud_vision_validate_file'] = [];
}

/**
 * File Upload Callback; Validates the given File against Google Cloud Vision.
 *
 * @see google_cloud_vision_field_widget_form_alter()
 */
function google_cloud_vision_validate_file(FileInterface $file) {
  $errors = [];
  // Retrieve the file path.
  $filepath = $file->getFileUri();
  $result = google_cloud_vision_image_safesearch($filepath);
  // Check the results: anything other than TRUE is treated as a list of errors.
  if ($result !== TRUE) {
    $errors = $result;
  }
  return $errors;
}
```
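The validator above relies on a simple contract: the SafeSearch helper returns TRUE when the image is acceptable, and otherwise something the validator can hand back to Drupal as a list of error messages. The body of google_cloud_vision_image_safesearch() is not shown here, so the following is only a minimal sketch of that contract for the deny-only check. The function name example_safesearch_result(), the input array shape, the blocking threshold, and the message wording are all assumptions made for illustration.

```php
<?php

/**
 * Hypothetical stand-in for the result check inside a SafeSearch helper.
 *
 * @param array $likelihoods
 *   Category => likelihood label, e.g. ['adult' => 'LIKELY'].
 *
 * @return true|string[]
 *   TRUE when nothing is blocked, otherwise a list of error messages.
 */
function example_safesearch_result(array $likelihoods) {
  // Assumed policy: block "adult" and "racy" at LIKELY or above.
  $blocked_labels = ['LIKELY', 'VERY_LIKELY'];
  $errors = [];
  foreach (['adult', 'racy'] as $category) {
    // A missing category is treated as UNKNOWN and never blocked.
    $label = $likelihoods[$category] ?? 'UNKNOWN';
    if (in_array($label, $blocked_labels, TRUE)) {
      $readable = strtolower(str_replace('_', ' ', $label));
      $errors[] = sprintf('The image was rejected: %s content is %s.', $category, $readable);
    }
  }
  // An empty error list means the image passed.
  return $errors ?: TRUE;
}
```

Returning messages rather than a bare FALSE lets the upload form tell the user why the image was refused.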

By implementing hook_field_widget_form_alter(), we were able to check whether or not images uploaded to the site had adult content, and deny their use. But what if content editors want moderation control over the images? There could be cases where an image is deemed “Racy”, but is still acceptable for use on the site. For this, we integrated with Drupal 8’s Workflow and Content Moderation modules to give content editors a dashboard in which they could approve or deny uploaded images.

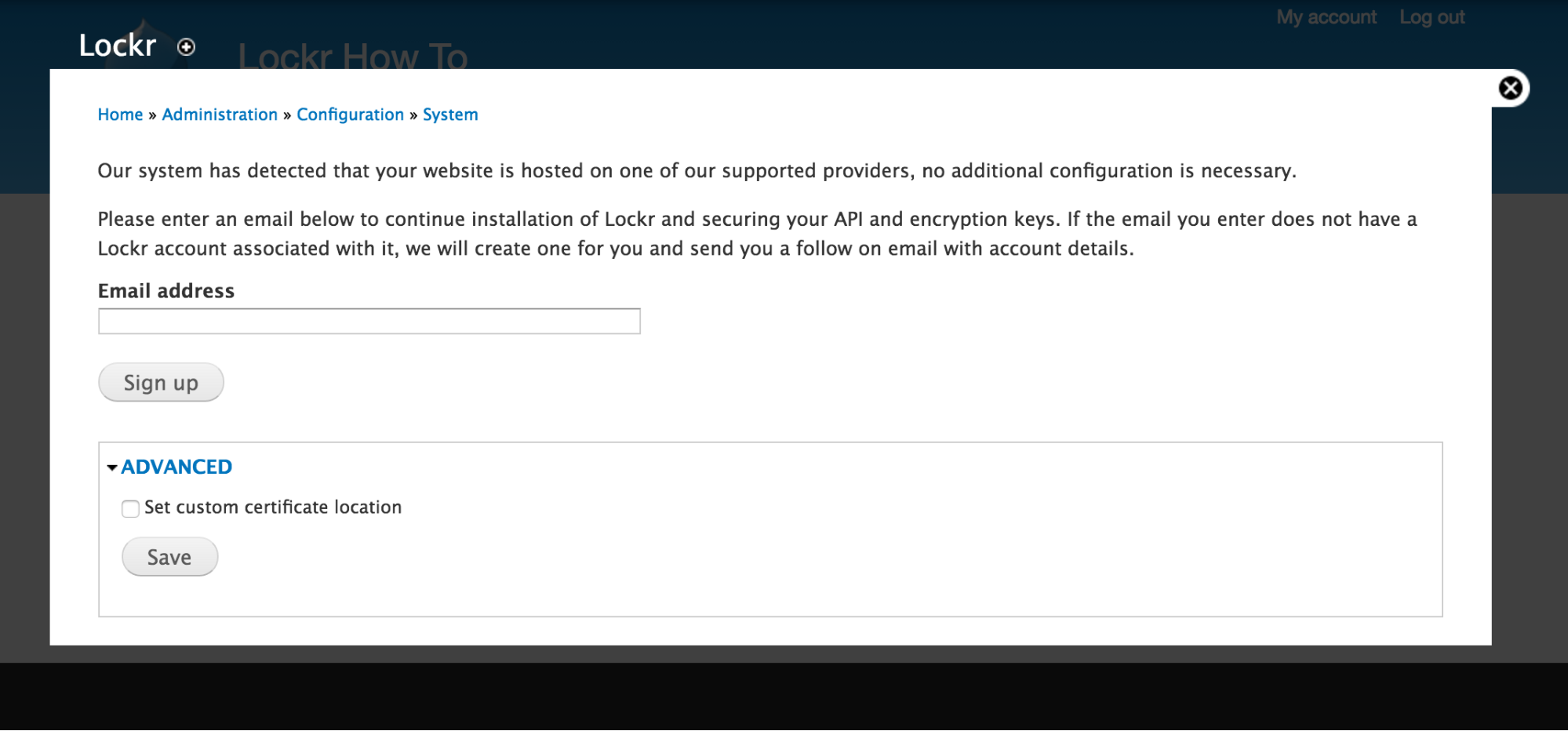

Making a Secure Open Source Module

One critical part of setting up the module was getting Drupal to communicate with the Google Cloud API. We wanted the service account key to be secure and encrypted, so we used Lockr. Lockr integrates with the Drupal Key module to provide a slick API to obtain encrypted off-site keys. This allowed us to host the site on Pantheon and open source the module, with the assurance that the service account key we used would not become public.

To see more about how Lockr works, read Chris Teitzel’s Using Lockr to Secure and Manage API and Encryption Keys.

Overall, Drupal gives content editors great control over how and what content gets published on their site. Pairing it with Google Cloud Vision brings an added dimension, and offloads to robots a lot of the work content editors would otherwise have to do. For more information on how to set up the Drupal module, generate a Google Cloud API service account key, and use Google Cloud Vision on your site, see Kalamuna's Google Cloud Vision Drupal module on GitHub.

Lessons from Working with Google Cloud Vision

It wasn’t too long ago that technology like Google Cloud Vision was the stuff of science fiction. As with any cutting-edge technology, we did at times hit the bleeding edge of its reliability. Like many intelligent machines, Google Cloud Vision AI has some unexpected quirks where some good ol’ fashioned human intervention is required.

Google Cloud Vision’s API is able to return a wealth of information about an image, identifying logos, faces, landmarks, handwriting, etc. We were specifically interested in tapping into Google’s Safe Search Detection API to flag explicit content in any uploaded images. This is the same technology that Google uses in its own Image Search tool, which allows users to toggle “safe search” on or off when searching for images on the Web.

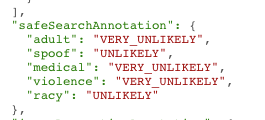

The Safe Search Detection API is able to flag an image as containing “adult”, “spoof”, “medical”, “violence”, or “racy” content. We decided that we wanted to target “adult” and “racy” content for our MVP, so that any photos containing nudity would be either rejected outright, or flagged for moderation.

So how would we know if an image should be completely blocked from being uploaded, or merely flagged for review by an editor? In addition to trying to guess if one of the above categories applies to a scanned image, the API also returns a likelihood rating for each category, ranging from “very unlikely” to “very likely”.

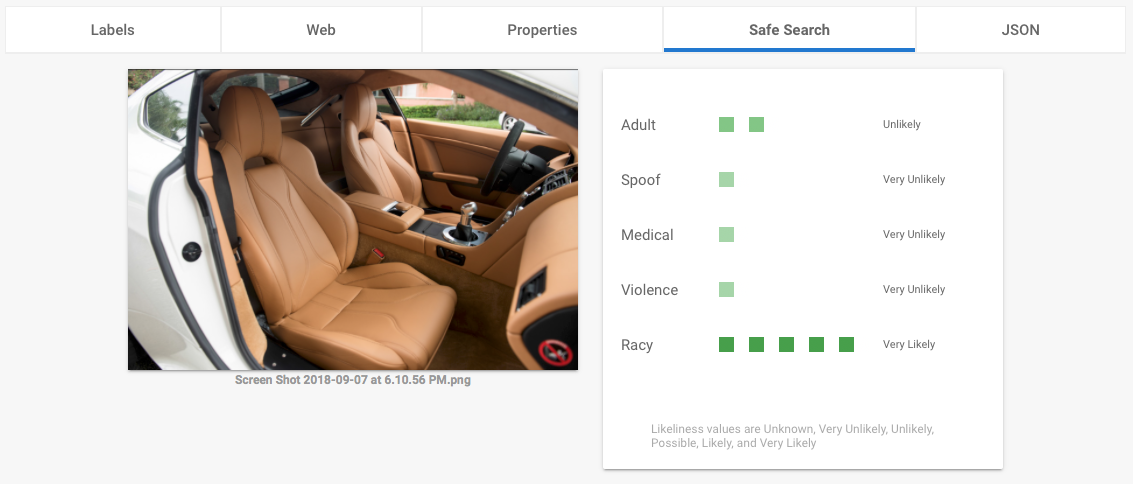

While working on this project, we learned that tan leather car seats are notoriously hard for computers to distinguish from human skin. Naturally, we had to test this for ourselves, and indeed, seats ranging in hue from beige to light brown would all come back as “racy” with a likelihood ranging from “very unlikely” all the way up to “very likely”, and some would even get flagged as “adult” (albeit qualified as “unlikely”).

Aware of this flaw, we realized that we would have to incorporate a degree of human moderation for any images flagged for “racy” content, and for “adult” content with an “unlikely” probability.
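The rule described above can be sketched as a small routing function. It takes the likelihood labels SafeSearch reports for “adult” and “racy” and returns one of three outcomes. This is a sketch under stated assumptions, not the module's actual logic: the function name, the outcome strings, and the exact thresholds (reject “adult” at “likely” or above, review “racy” at “possible” or above) are all hypothetical. Only the ordering of the likelihood labels comes from the Vision API.

```php
<?php

/**
 * Ranks SafeSearch likelihood labels from least to most likely.
 */
const EXAMPLE_LIKELIHOOD_RANK = [
  'UNKNOWN' => 0,
  'VERY_UNLIKELY' => 1,
  'UNLIKELY' => 2,
  'POSSIBLE' => 3,
  'LIKELY' => 4,
  'VERY_LIKELY' => 5,
];

/**
 * Routes an image to 'approved', 'needs_review', or 'rejected'.
 *
 * Hypothetical helper; the thresholds are assumptions for illustration.
 */
function example_moderation_decision(string $adult, string $racy): string {
  // Unrecognized labels are treated as UNKNOWN.
  $adult_rank = EXAMPLE_LIKELIHOOD_RANK[$adult] ?? 0;
  $racy_rank = EXAMPLE_LIKELIHOOD_RANK[$racy] ?? 0;

  // Assumed threshold: "adult" at LIKELY or above is rejected outright.
  if ($adult_rank >= EXAMPLE_LIKELIHOOD_RANK['LIKELY']) {
    return 'rejected';
  }
  // "adult" at UNLIKELY or POSSIBLE may be a false positive (think tan
  // car seats), so an editor decides. "racy" never rejects on its own;
  // assumed threshold for review is POSSIBLE or above.
  if ($adult_rank >= EXAMPLE_LIKELIHOOD_RANK['UNLIKELY']
    || $racy_rank >= EXAMPLE_LIKELIHOOD_RANK['POSSIBLE']) {
    return 'needs_review';
  }
  return 'approved';
}
```

Keeping the thresholds in one function makes it easy to tighten or loosen the policy as editors learn how often the API produces false positives on their own content.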

You can try it for yourself on the Google Vision product page.

Planning for a Real-World Publishing Workflow

Throughout the proof-of-concept project we had Patch.com in mind as the end user of this toolset. After all, if we were going to develop something useful, it made sense to model our solution on a real-world use case. So after figuring out how Google Cloud Vision worked, one of the next things we needed to do was figure out how to incorporate it into a real-world publishing workflow.

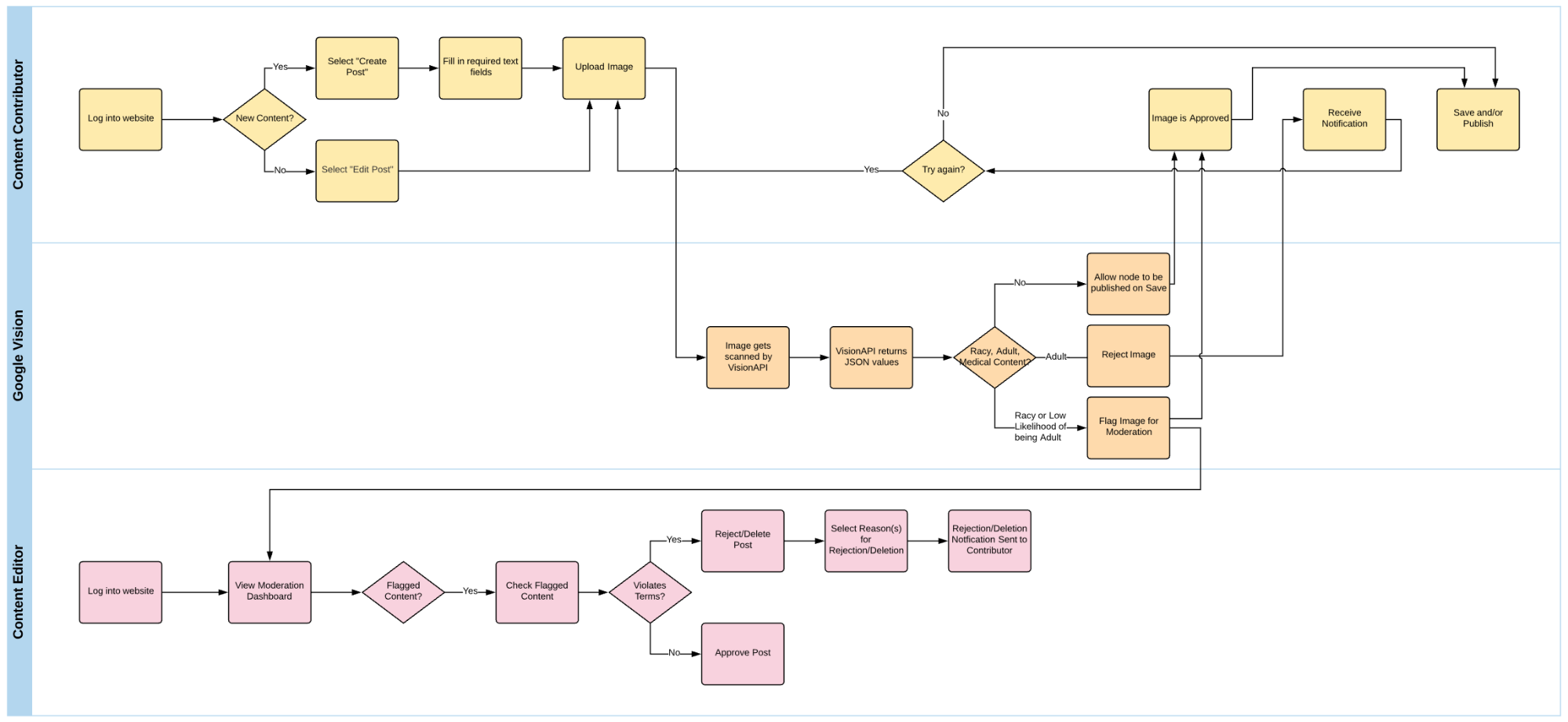

This is the workflow diagram that we designed for the editorial process. When an image is uploaded by a user, it gets processed by Google Cloud Vision (in orange). From there, the image is either approved, rejected, or flagged for manual moderation. Since Google Cloud Vision can return false positives, we had to introduce a Content Editorial Moderation Dashboard to give editors control over which content is approved or rejected.

Lessons Learned: Limitations of PHP Uploads and Legal Liability Concerns

An uploaded file saved anywhere on your server – even temporarily in a file buffer – can potentially classify you as a distributor of that content. An image could contain subject matter or content that could make you liable for copyright violation or place you in serious legal jeopardy.

Drupal saves images uploaded through image fields in the “tmp” temporary directory. Theoretically, to avoid writing the file to disk at all, JavaScript could encode the selected file as a base64 string and upload that through a POST request. Drupal would then catch the string, decode it, and pass the result off to Google Cloud Vision. Being able to process images before they’re saved locally was outside of the scope of this module, but we learned new lessons in how Drupal and PHP handle file uploads along the way.
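As a rough illustration of that theoretical approach, the server-side half could look something like the sketch below: it takes the base64 string from a POST field, decodes it in memory, and does a cheap sanity check on the file signature before anything is sent on for analysis. This was not part of the module. The function name example_decode_image_payload() and the choice of accepted formats (PNG, JPEG, GIF) are assumptions made for illustration.

```php
<?php

/**
 * Decodes a base64 image payload without writing it to the filesystem.
 *
 * Hypothetical helper, not part of the module described in this post.
 *
 * @return string|null
 *   The raw image bytes, or NULL if the payload is not valid base64 or
 *   does not start with a recognized image signature.
 */
function example_decode_image_payload(string $payload): ?string {
  // Strip an optional data-URI prefix such as "data:image/png;base64,".
  $payload = preg_replace('#^data:image/[a-z0-9.+-]+;base64,#i', '', $payload);

  // Strict mode rejects characters outside the base64 alphabet.
  $bytes = base64_decode($payload, TRUE);
  if ($bytes === FALSE || $bytes === '') {
    return NULL;
  }

  // Compare the leading bytes against PNG, JPEG, and GIF signatures.
  $signatures = ["\x89PNG\r\n\x1a\n", "\xFF\xD8\xFF", 'GIF87a', 'GIF89a'];
  foreach ($signatures as $signature) {
    if (strncmp($bytes, $signature, strlen($signature)) === 0) {
      return $bytes;
    }
  }
  return NULL;
}
```

The decoded bytes stay in a PHP variable, so they could be passed along in the analysis request instead of being read back from a saved file. Note that this only sidesteps Drupal's own temporary file handling; it does not by itself settle the legal questions raised above.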

Drupal 7 vs Drupal 8

As part of our proof-of-concept, we put together both a Drupal 7 and a Drupal 8 version of the module. Since Patch.com was running on Drupal 7, we had a good use case to test. There were a couple of benefits that came with the Drupal 8 version, however.

- Package Management
  - Drupal 8 integrates very well with the Composer package manager. This meant that we could bring down the Google Cloud Vision PHP package seamlessly through a call to composer install. We managed to do something similar in Drupal 7, but had to jump through some hoops to make sure it functioned correctly.
- Content Moderation
  - The new Workflow and Content Moderation modules in Drupal 8 gave content editors a great interface to approve or deny publication of content flagged by SafeSearch.

To Be Continued...

Working on this project was both challenging and a lot of fun, and it definitely got us thinking about all the ways we could incorporate AI and Machine Learning into large-scale Drupal sites, and how we could improve the content moderation experience for sites like Patch.com. Helping people save time so they can focus on the things machines can’t do is fundamental to the work we do every day.

Want to learn more?

Rob Loach, Kalamuna’s Director of Technology, will be presenting a session at DrupalCon Seattle about this project: “Hot Dog/Not Hot Dog: Artificial Intelligence with Drupal”

Date: 04/11/2019

Time: 9:45 am – 10:15 am

Room: 607 | Level 6